Earlier this month Red Hat started publishing Open Vulnerability and Assessment Language (OVAL) definitions for Red Hat Enterprise Linux security issues and today we obtained official compatibility. But what are these definitions, how do you use them, and why are they important?

One of the goals of Red Hat Enterprise Linux is to maintain backward compatibility of the packages we ship where possible. This goal means that when we release security updates to fix vulnerabilities, we include just the security fixes in isolation, a process known as backporting. Backporting security fixes has the advantage that it makes installing updates safer and easier for customers, but has the disadvantage that it can confuse people unfamiliar with the process who try to use the version number of a particular piece of software to determine its patch status.
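To make the version-number confusion concrete, here is a minimal sketch in Python. The package versions are hypothetical and chosen only for illustration, and the comparison is a simplification: real RPM version ordering (epochs, letters, tildes) is more involved than comparing the numeric parts.

```python
import re


def numeric_parts(version):
    """Split a version string into its integer components, ignoring letters."""
    return tuple(int(p) for p in re.findall(r"\d+", version))


def naive_is_patched(installed_upstream, upstream_fixed_in):
    """Upstream-only check: misleading when fixes are backported."""
    return numeric_parts(installed_upstream) >= numeric_parts(upstream_fixed_in)


def vendor_is_patched(installed, vendor_fixed_in):
    """Compare (upstream version, vendor release) pairs, as an advisory would."""
    inst = (numeric_parts(installed[0]), numeric_parts(installed[1]))
    fixed = (numeric_parts(vendor_fixed_in[0]), numeric_parts(vendor_fixed_in[1]))
    return inst >= fixed


# Hypothetical numbers, for illustration only.
installed = ("2.0.52", "22")         # version and release found on the system
upstream_fixed_in = "2.0.55"         # first upstream release containing the fix
vendor_fixed_in = ("2.0.52", "19")   # vendor build that carries the backport

print(naive_is_patched(installed[0], upstream_fixed_in))  # False
print(vendor_is_patched(installed, vendor_fixed_in))      # True
```

The upstream-only check reports the system as vulnerable even though the vendor build already carries the fix, which is exactly the mistake a tool makes when it looks only at the upstream version number.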

In 2002, Red Hat started publishing Common Vulnerabilities and Exposures (CVE) vulnerability identifiers on every security advisory in order to make it easy to see what we fixed and how. Customers need only know the CVE identifiers for the vulnerabilities they are interested in and can then find out quickly and easily which of our updates addressed that particular vulnerability. CVE is now used on security advisories from nearly all the major vendors.

Red Hat has a single common mechanism for keeping systems up to date with security errata, the Red Hat Network. The Red Hat Network looks at a customer's machines to determine which updates are required and gives anything from a customised notification that an update is available through to automated installation. Third party patch auditing tools don't have such an easy time figuring out what updates are required: they have to maintain their own list of Red Hat package versions against vulnerability names. As this list is different for each operating system version from each potential vendor, these tools are prone to many errors and lag behind our updates.

We've also found customers that query the Red Hat Network errata pages directly to gather information about our security advisories and put them into a format they can integrate with their own processes. Many customers take feeds of vulnerability data, usually in some XML format, from third party security vulnerability companies.

MITRE recognised both of these issues a number of years ago when they founded the Open Vulnerability and Assessment Language (OVAL) project in 2002. The aim of OVAL is to provide a language for defining how to test for vulnerabilities and system configuration errors in an open and cross-platform manner. Red Hat was a founding board member of the OVAL project as part of our overall commitment to security quality.

So Red Hat now publishes OVAL 5 definitions for our Red Hat Enterprise Linux 3 and 4 security advisories. Each security advisory gets a separate XML OVAL file which defines the steps needed to test if an update is required for the target system. In an ideal world every Red Hat Enterprise Linux system would be consuming every update from Red Hat Network automatically, but for those that don't, or where systems have been disconnected for some time, these definitions can help determine the patch status. In addition, these definitions contain selected information from our advisories which can be combined with vulnerability feeds from third parties.

Red Hat OVAL patch definitions contain:

- A link to the original advisory

- CVE references for all the vulnerabilities fixed by the advisory

- References into our public bug tracking database

- The severity of the advisory

- A short description abstract taken from the advisory text

- For each Enterprise Linux version, a list of the packages and versions required to determine if the update is required
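As a rough illustration of how a consumer might pull the fields listed above out of a definition file, here is a minimal Python sketch. The embedded XML is a trimmed, made-up sample (the identifiers, title and CVE names are placeholders), and the element layout is an assumption based on the general shape of OVAL 5 definitions rather than a copy of a real Red Hat file.

```python
import xml.etree.ElementTree as ET

# Namespace used by OVAL 5 definition documents.
NS = {"oval": "http://oval.mitre.org/XMLSchema/oval-definitions-5"}

# Made-up sample; every identifier below is a placeholder.
SAMPLE = """<oval_definitions xmlns="http://oval.mitre.org/XMLSchema/oval-definitions-5">
  <definitions>
    <definition id="oval:com.example:def:1" version="1" class="patch">
      <metadata>
        <title>Example advisory: examplepkg security update</title>
        <reference source="CVE" ref_id="CVE-0000-0001"/>
        <reference source="CVE" ref_id="CVE-0000-0002"/>
        <description>Illustrative description text.</description>
        <advisory>
          <severity>Important</severity>
        </advisory>
      </metadata>
    </definition>
  </definitions>
</oval_definitions>"""


def summarise(xml_text):
    """Return one dict per definition with its title, CVE names and severity."""
    root = ET.fromstring(xml_text)
    summaries = []
    for definition in root.iterfind(".//oval:definition", NS):
        meta = definition.find("oval:metadata", NS)
        cves = [
            ref.get("ref_id")
            for ref in meta.iterfind("oval:reference", NS)
            if ref.get("source") == "CVE"
        ]
        summaries.append({
            "id": definition.get("id"),
            "title": meta.findtext("oval:title", default="", namespaces=NS),
            "cves": cves,
            "severity": meta.findtext(
                "oval:advisory/oval:severity", default=None, namespaces=NS
            ),
        })
    return summaries


for entry in summarise(SAMPLE):
    print(entry["id"], entry["severity"], ", ".join(entry["cves"]))
```

A real patch audit would also need to evaluate the criteria and package tests in each definition, which is the job of an OVAL interpreter; this sketch only reads the metadata.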

The actual OVAL definitions themselves are available from https://www.redhat.com/oval/ and are public within a couple of hours of an advisory being pushed to the Red Hat Network. OVAL definitions for all previous Red Hat Enterprise Linux 3 and 4 advisories are also available. At present we do not ship OVAL tools such as a definition interpreter, but there are several open-source and commercial OVAL-compatible tools available.

For the future we encourage other vendors to publish definitive OVAL definitions for their products too, and we hope to work on compatibility testing with other operating system and tool vendors.

More information about the make-up of the OVAL patch definitions can be found at the MITRE OVAL site. An FAQ about our implementation and where to contact us with comments or questions is also available.

Just back from my two presentations and I've uploaded the final versions (which replace the ones distributed on the conference CD).

- Security Vulnerability Management (PDF, 1Mb)

- A year of Red Hat Enterprise Linux 4 (PDF, 1Mb)

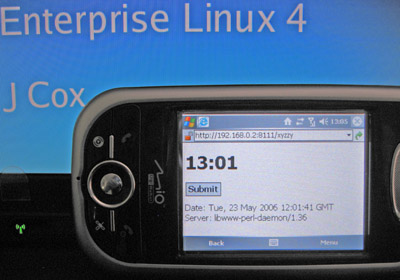

When I give presentations I have to find something to display the time (since I never wear a watch), somewhere to put some speaker notes down (since I sometimes forget a useful point), and then I keep knocking it all over every time I go to the laptop to hit space to get the next slide. I'd quite like to use the laptop display to display my speaker notes and a clock, but OpenOffice doesn't support doing that yet.

I recently changed to using a smartphone to save carrying around and having to recharge lots of gadgets. I bought a Mio A701 which is a nice phone, PDA, and has GPS in one package. Since the Mio also has bluetooth I thought that for my presentations at the Red Hat Summit next week it would be nice to use the PDA to control the presentation, watch my time, and give me any speaker notes.

The smartphone runs Windows Mobile 5, unfortunately, and I wanted to set something up quickly and without much effort. I don't mind writing apps for it, but I'd rather avoid it. So my first thought was to use vnc, but the vnc client on the pocketpc wasn't great and kept crashing, and I'd have to create some app to interface with OpenOffice anyway. Once OpenOffice supports multiple displays it may be more useful to revisit doing this via vnc.

My laptop runs Fedora Core 5 with a MSI bluetooth USB dongle plugged in.

Step 1: Get the phone talking to the laptop

This should have been the easiest step, but took an hour to get working right as I originally struggled getting the phone to connect to a 'serial port' service. The commands below were sufficient to advertise a 'dial up network' service and have pppd handle the connection. I didn't bother setting up any IP forwarding as I don't need the phone to be able to use the laptop as a way to get generic network access.

    /sbin/service bluetooth start
    sdptool add --channel=2 DUN
    dund -u --listen --channel=2 --msdun noauth 192.168.1.1:192.168.1.2 \
        crtscts 115200 ms-dns 192.168.1.1 lock

Then on the Windows Mobile device I added a new connection, selected "bluetooth" modem, created a new partnership with the laptop DUN service, entered any phone number and any username and password (to stop it prompting later), and used the advanced settings to remove the "wait for dial tone" option. If you're doing this from scratch you'll need to play with settings in /etc/bluetooth/hcid.conf to make sure you set up a PIN for pairing and so on.

Once this is done using the browser on the phone with a URL of https://192.168.1.1/ causes it to connect, pppd starts, and the phone happily can connect to the web server on the laptop. If you want DNS working you'll need to mess with the dns IP above or make sure your laptop DNS server is set up to accept connections on that interface. So far so good.

Step 2: Control the presentation

The next step was to be able to control the presentation. I couldn't see any nice way to remotely control OpenOffice.org, so a colleague suggested finding something that used the XTEST extension just to inject keystrokes. The X11::GUITest Perl module on CPAN does the job perfectly. So I hacked up a quick Perl script you run as your local user that acts as a web server and on certain requests will inject a space character into whatever has focus.
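The original script is Perl using X11::GUITest; as a rough sketch of the same idea in Python, the following serves a page with a "next slide" link and injects a space keypress when that link is requested. It is not the author's script: the `/next` path, the port, and the use of the `xdotool` command-line utility for key injection are all assumptions made for illustration.

```python
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer


def press_space():
    # Assumption: the xdotool utility is installed and an X session is running.
    subprocess.run(["xdotool", "key", "space"], check=False)


def handle_path(path, on_next):
    """Trigger on_next for the hypothetical /next path and return the page body."""
    if path == "/next":
        on_next()
    return b'<html><body><a href="/next">Next slide</a></body></html>'


def make_handler(on_next):
    """Build a request handler class bound to the given key-injection callback."""
    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = handle_path(self.path, on_next)
            self.send_response(200)
            self.send_header("Content-Type", "text/html")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the terminal quiet during the talk

    return Handler


def serve(address="192.168.1.1", port=8080, on_next=press_space):
    """Listen on the laptop's end of the PPP link until interrupted."""
    HTTPServer((address, port), make_handler(on_next)).serve_forever()
```

Calling `serve()` as the logged-in user, with the presentation window focused, would let the phone's browser advance slides by following the link. Binding to the PPP address rather than all interfaces keeps other machines on the conference network from driving the slides.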

Step 3: Speaker Notes

Next step is to get the mini perl webserver to display my speaker notes as well as the link to the next slide, although, to be honest, I could probably have committed the notes to memory in the time it took to set this all up.

the trivial little perl script

Remember all those reports which compared the number of security vulnerabilities in Microsoft products against Red Hat? Well, researchers have just uncovered proof and an admission that Microsoft silently fixes security issues; in one case an advisory states it fixes a single vulnerability but it actually fixes seven.

Whilst you could perhaps argue that users don't really care if an advisory fixes one critical issue or ten (the fact it contains "at least one" is enough to force them to upgrade), all this time the Microsoft PR engine has been churning out disingenuous articles and doing demonstrations based on vulnerability count comparisons.

My home automation tablets use Perl/Tk as their user interface which makes coding and prototyping really quick and easy and works on both Linux and Windows platforms.

I use ZoneMinder for looking after the security cameras around the house and had set up the tablets to be able to display a static image from any camera on demand. But what I really wanted to do was to let the tablets display a streaming image from the cameras.

ZoneMinder is able to stream to browsers by making use of the Netscape server push functionality. In response to a HTTP request, ZoneMinder will send out a multipart replace header, then the current captured frame as a jpeg image, followed by a boundary string, followed by the next frame, and so on until you close the connection. It's perhaps not as efficient as streaming via mpeg or some other streaming format, but it's simple and lets you stream images to browsers without requiring plugins.
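The framing described above can be sketched independently of any GUI. The following Python generator takes the response body as a sequence of byte chunks (as a socket read loop would produce) and yields each frame's payload. It assumes the boundary string `ZoneMinderFrame` and, like the Perl program in this post, ignores Content-Length and relies on the boundary alone; the function name is made up for this example.

```python
def iter_frames(chunks, boundary=b"ZoneMinderFrame"):
    """Yield the payload of each part in a multipart-replace byte stream.

    chunks is any iterable of bytes objects covering the HTTP response body.
    A part is only yielded once the boundary that follows it has arrived.
    """
    marker = b"--" + boundary + b"\r\n"
    buf = b""
    for chunk in chunks:
        buf += chunk
        while True:
            end = buf.find(marker)
            if end < 0:
                break  # need more data before the next boundary is complete
            part, buf = buf[:end], buf[end + len(marker):]
            # Each part is: headers, blank line, payload, trailing CRLF.
            headers, sep, body = part.partition(b"\r\n\r\n")
            if not sep or not body:
                continue  # preamble or empty part before the first frame
            if body.endswith(b"\r\n"):
                body = body[:-2]
            yield body


# Synthetic stream with short stand-in payloads instead of real JPEG data.
stream = (
    b"--ZoneMinderFrame\r\nContent-Type: image/jpeg\r\n\r\nAAA\r\n"
    b"--ZoneMinderFrame\r\nContent-Type: image/jpeg\r\n\r\nBBB\r\n"
    b"--ZoneMinderFrame\r\n"
)
pieces = [stream[i:i + 5] for i in range(0, len(stream), 5)]
print(list(iter_frames(pieces)))
```

Feeding the stream in five-byte pieces shows that a boundary split across reads is still found, because the search always runs over the accumulated buffer.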

So I wrote the quick Perl/Tk program below to test streaming from ZoneMinder. It does make some horrible assumptions about the format of the response, so if you want to use this with anything other than ZoneMinder you'll need to edit it a bit. It also assumes that your network is quite good between the client and ZoneMinder; the GUI will become unresponsive if the network read blocks.

My first attempt ran out of memory after an hour -- I traced the memory leak to Tk::Photo and it seems that you have to use the undocumented 'delete' method on a Tk::Photo object otherwise you get a large memory leak. The final version below seems to work okay though.

    # Test program to decode the multipart-replace stream that
    # ZoneMinder sends. It's a hack for this stream only though
    # and could be easily improved. For example we ignore the
    # Content-Length.
    #
    # Mark J Cox, mark@awe.com, February 2006

    use Tk;
    use Tk::X11Font;
    use Tk::JPEG;
    use LWP::UserAgent;
    use MIME::Base64;
    use IO::Socket;

    my $host = "10.0.0.180";
    my $url = "/cgi-bin/zms?mode=jpeg&monitor=1&scale=50&maxfps=2";
    my $stop = 0;

    my $mw = MainWindow->new(title=>"test");
    my $photo = $mw->Label()->pack();
    $mw->Button(-text=>"Start",-command => sub { getdata(); })->pack();
    $mw->Button(-text=>"Stop",-command => sub { $stop=1; })->pack();
    MainLoop;

    sub getdata {
        return unless ($stop == 0);
        my $sock = IO::Socket::INET->new(PeerAddr=>$host,Proto=>'tcp',PeerPort=>80,);
        return unless defined $sock;
        $sock->autoflush(1);
        print $sock "GET $url HTTP/1.0\r\nHost: $host\r\n\r\n";
        my $status = <$sock>;
        die unless ($status =~ m|HTTP/\S+\s+200|);

        my ($grab,$jpeg,$data,$image,$thisbuf,$lastimage);

        while (my $nread = sysread($sock, $thisbuf, 4096)) {
            $grab .= $thisbuf;
            if ( $grab =~ s/(.*?)\n--ZoneMinderFrame\r\n//s ) {
                $jpeg .= $1;
                $jpeg =~ s/--ZoneMinderFrame\r\n//;     # Heh, what a
                $jpeg =~ s/Content-Length: \d+\r\n//;   # Nasty little
                $jpeg =~ s/Content-Type: \S+\r\n\r\n//; # Hack
                $data = encode_base64($jpeg);
                undef $jpeg;
                eval {
                    $image = $mw->Photo(-format=>"jpeg",-data=>$data);
                };
                undef $data;
                eval {
                    $photo->configure(-image=>$image);
                };
                $lastimage->delete if ($lastimage); #essential as Photo leaks!
                $lastimage = $image;
            }
            $jpeg .= $1 if ($grab =~ s/(.*)(?=\n)//s);
            last if $stop;
            $mw->update;
        }
        $stop = 0;
    }

In March 2005 we started recording how we first found out about every security issue that we later fixed as part of our bugzilla metadata. The raw data is available. I thought it would be interesting to summarise the findings. Note that we only list the first place we found out about an issue, and for already-public issues this may be arbitrary depending on whoever in the security team creates the ticket first.

So from March 2005-March 2006 we had 336 vulnerabilities with source metadata that were fixed in some Red Hat product:

- 111 (33%) vendor-sec

- 76 (23%) relationship with upstream project (Apache, Mozilla, etc.)

- 46 (14%) public security/kernel mailing list

- 38 (11%) public daily list of new CVE candidates from MITRE

- 24 (7%) found by Red Hat internally

- 18 (5%) an individual (issuetracker, bugzilla, secalert mailing)

- 15 (4%) from another Linux vendor's bugzilla (Debian, Gentoo, etc.)

- 7 (2%) from a security research firm

- 1 (1%) from a co-ordination centre like CERT/CC or NISCC

(Note that the number from researchers may seem lower than expected. This is because in many cases the researcher will tell vendor-sec rather than each entity individually, and some researchers, such as iDefense, sometimes give us no notice of an issue before making it public on a security mailing list.)
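The shares in the breakdown above follow directly from the counts. As a quick check of the arithmetic, this short Python sketch recomputes them; the dictionary keys are abbreviated labels of my own, while the counts are the ones listed.

```python
# Counts as listed in the breakdown; the keys are shortened labels.
counts = {
    "vendor-sec": 111,
    "upstream project": 76,
    "public mailing list": 46,
    "CVE candidate list": 38,
    "Red Hat internal": 24,
    "individual": 18,
    "other vendor bugzilla": 15,
    "security research firm": 7,
    "co-ordination centre": 1,
}

total = sum(counts.values())
shares = {source: 100.0 * n / total for source, n in counts.items()}

print("total:", total)
for source, n in counts.items():
    print(f"{source:24s} {n:4d} {shares[source]:5.1f}%")
```

The counts sum to the 336 vulnerabilities quoted. The single co-ordination centre report works out to roughly 0.3% of the total, so the 1% shown for it in the list is a rounded-up figure.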

Last year I wrote about how both Red Hat and Microsoft shipped the third party Flash browser plugin with their OS and whilst we made it easy for users who were vulnerable to get new versions, Microsoft made it hard. With another critical security issue in Flash last week, George Ou has noticed the same thing.

Just finished the security audit for FC5 - For 20030101-20060320 there are a potential 1361 CVE named vulnerabilities that could have affected FC5 packages. 90% of those are fixed because FC5 includes an upstream version that includes a fix, 1% are still outstanding, and 9% are fixed with a backported patch. Many of the outstanding and backported entries are for issues still not dealt with upstream. For comparison FC4 had 88% by version, 1% outstanding, 11% backported.

What defines transparency? The ability to expose the worst with the best, to be accountable. My risk report was published today in Red Hat Magazine and reveals the state of security since the release of Red Hat Enterprise Linux 4 including metrics, key vulnerabilities, and the most common ways users were affected by security issues.

On Monday a vulnerability was announced affecting the Linux kernel that could allow a remote attacker who can send a carefully crafted IP packet to cause a denial of service (machine crash). This issue was discovered by Dave Jones and allocated CVE-2006-0454. As Dave notes, it has so far proved difficult to reliably trigger (my attempts so far succeed in logging dst badness messages and messing up future ICMP packet receipts, but haven't triggered a crash).

This vulnerability was introduced into the Linux kernel in version 2.6.12 and therefore does not affect users of Red Hat Enterprise Linux 2.1, 3, or 4. An update for Fedora Core 4 was released yesterday.